by Martin Cavarga

Meta-what?

Popular 3D modeling engines such as Blender, Maxon’s Cinema 4D, or 3DSMax from Autodesk have an interesting tool to create blob-like organic looking shapes that appear to be like a dynamic membrane around geometric objects. How are they made? What objects can we use as generators of such membranes, and what parameters can we use to take advantage of this concept? All of these questions will be answered in this post about what I could easily call one of my most interesting projects.

The process of constructing such objects has a very interesting mathematical background. Even readers who did not have much opportunity or will to learn higher mathematics can grasp the concept of iso-surfaces.

If I were to explain the concept of an iso-surface or an iso-curve to an average person, I would first take them back to their time as girl or boy-scouts. Most people have probably read topographic maps like these

which depict the points with the same elevation as lying on a common line or a curve. We know that the landscape is 3-dimensional, but to draw it accurately on a 2-dimensional paper, we use contour lines (or level lines) to contain the 3D information about elevation.

What would happen if we had a 3D map of a 4D landscape? We can only draw limited representations (projections) of 4-dimensional objects. Drawing an entire map with any accuracy would be impossible because we are inherently 3-dimensional creatures, bound do 3D space.

Another way to think of a “3D map” would be to try mapping the air density in a room. We know air is made of gas molecules, but their size is so tiny that we can think of the air as a continuum made of infinitely small particles, we can see that every point in space would be assigned a single value (for example in grams per cubic centimeter). Now imagine that for some reason, there would be more air in one corner of the room, so the density would grow as we approach that point.

An iso-surface would be (just like an iso-curve on a topographic map) a set of points where the air would have the same density.

Mathematically, such density would be a function mapping each point of domain

onto a scalar value

. An iso-set would then be defined as

(iso, meaning the same in Greek). In a simple 1-dimensional case (

) an iso-set would just be a set of singular points on a line. For

it would form a contour line, and in

it would be a surface, as we can see in the image above.

We will think of functions as scalar fields (fields of value) defined everywhere on spatial domains . A “Metaball” object will just be an iso-surface of a scalar field (more precisely its triangulation).

Triangulating fields

Readers who are at least somewhat familiar with computer graphics will understand, that in order to view something like an abstract (mathematical) surface (e.g. a sphere) on a computer screen, we need to subdivide it into triangles (or other polygons). There is also a possibility of computing and displaying just visible pixels containing a surface using shaders, but I chose a path that wasn’t so alien to me at the time.

CG (computer graphics) gurus with some coding experience have probably stumbled upon a so called Marching Cubes algorithm which is almost 35-years old by now. It was first developed by a research team from General Electric to help render volume data from tomography and other medical sources.

All it needs is a grid of points (in 3D) evenly distributed in the directions of the main coordinate axes with a value stored in each of them. In order to save storage space for ordinarily-sized datasets we just need the grid dimensions , grid origin

and cell size

to fully determine the location of every grid point in space. On top of that we just need a (32-bit float) buffer array of values corresponding to each grid point.

We can then index the grid space with three independent indices ,

,

, and access any field value in the buffer using a combined grid index:

Grid points adjacent to

form a cube with edge length

. Since a cube has 8 edges, an iso-surface can intersect such cube in

distinct ways.

That is a lot of combinations to cover, but fortunately due to the spatial symmetry of a cube, all can be reduced to 20 distinct combinations as seen above.

As it turns out, it is far more computationally efficient to store all 256 combinations in lookup tables (arrays of edge and triangle indices), as described in possibly the most famous post on this topic, by Paul Bourke – Polygonizing a Scalar Field. The ideas and lookup tables from Paul Bourke’s site have been recycled ever since they came to be. According to some sources, this implementation of the Marching Cubes algorithm is regarded as the most copy-pasted code of all time.

After a proper surface-cell intersection is found, the positions of the triangle vertices are then linearly interpolated with respect to field values at the surrounding grid points.

Since the Marching Cubes algorithm has been implemented so many times it will save anyone a lot of time to just use a particular working implementation

For example, if you’re most familiar with with coding your CG stuff in three.js (which was my particular case), feel free to use this implementation as an example to build upon.

There is also a possibility to use voxel rendering. Voxels are, just like pixels, portions of space which we fill with values (e.g.: color intensity). A cube cell of a grid with size is a voxel, and if the field values of its surrounding vertices lie somewhere between above the iso-level and below it, this gives us an opportunity to ditch searching through large edge tables interpolating on the edges, and instead reduce the cell intersection problem to just 21 combinations.

This method produces a really cool rasterization effect, the same as when constructing a terrain in Minecraft. It is sometimes referred to as Voxelization or Voxel rendering. There are multiple ways to do this, but only a few work together with the polygonization approach. Some use a field of booleans instead of floats, and either completely ignore the neighboring cells or use a form of culling for determining which cell faces get constructed. An interesting example can be seen here.

However, when I wanted to add voxel rendering I had to completely re-invent a new lookup table, which took some time. Since there are only 21 combinations anyone can draw them on a single page within a few minutes, just thinking which cell vertices are “active” and what cell faces get activated in each case.

Generating Fields from Scene Objects

Those who build apps for users without any math background or even scalar datesets (e.g.: 3D medical data) available for rendering, need to find a way to make the rendered scalar field fully controllable. Using inputs other than implicit math formulas like (which produces a field whose iso-surfaces are concentric spheres, centered at

), is something not that widespread online.

Truth is that I had to staple together bits and pieces of ideas to form a coherent picture of what I wanted to achieve. For example Blender uses special types of objects that generate scalar fields whose iso-surfaces form something like extensions of geometric primitives (spheres, cylinders, capsules). This seemed very limiting and not very user friendly, since all “metas” could be generated using just these few specialized objects, and the user can only work with one meta surface with all the meta objects combined and then bake it to be able to create another such object. Maxon’s Cinema 4D, on the other hand, allows the user to insert an arbitrary geometric object inside a modifier which generates the field from all objects inside it.

Let’s consider field again, and what it really (mathematically) is. This function is the square of a distance function to a single point at the coordinate origin (think of Pythagoras’ theorem in 3D and the length of a triangle hypotenuse). By adding a single parameter

(radius) we get

which is a squared distance function generated by a sphere with radius

centered at origin.

A similar distance function can be derived from other geometric primitives, such as box, ellipsoid, capsule, cylinder, torus, etc. using just the primitive’s parameters (such as radius and height

for a cylinder). Some primitives (e.g. ellipsoid) have distance functions that cannot be exactly computed using a single formula. There are, however, many inexact approximations which work just as fast. Feel free to check out Inigo Quilez’s web to get some inspiration on how to implement distance functions to various geometric primitives.

That being said, one can then compute squared distances to geometric primitives with given parameters for every grid point .

Combining Meta Objects

Generating a single meta-object is easily done using the distance functions at our disposal. However, such object would be static, it would only morph by the user changing the isolevel or cell size, but it wouldn’t combine with other such entities.

The preferred implementation of a combined scalar field depends on the marching cubes implementation itself. For example in an implementation directly from three.js examples (inspect marchingCubes.js) we notice that all metaballs are essentially point sources generated by a formula

where stands for field strength and values

and

correspond to cutoffs in order to get rid of a singularity and distant field values respectively. This is essentially a form of an inverse square law

. Another possibility is using something like an older Stemkoski’s implementation which uses something similar to a 3D Gaussian:

where

is the distance to the ball center.

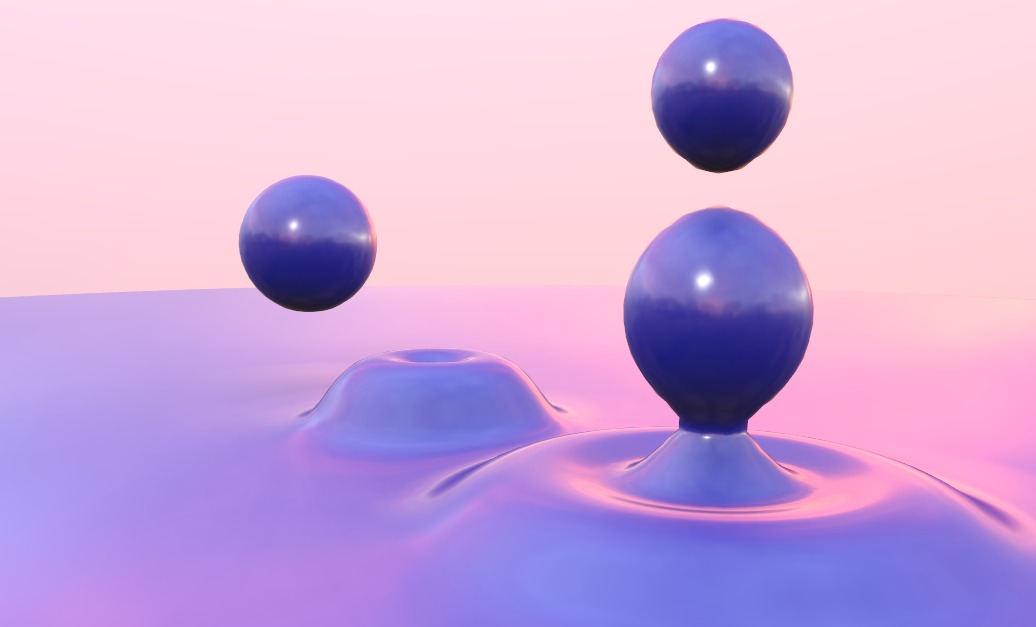

The main advantage of such fields is that they exhibit what physicists call a superposition principle, i.e. that the value at each point is determined by a sum of fields generated by all sources. As we can see in the images below, it works much better with an inverse-square law formula:

sum of raw distances implementation

sum of inverse square law expressions implementation

If we wanted to use just distance functions and

to two distinct objects, adding them would not produce desirable results. Neither would adding their limited representations

, where

.

Adding two inverse-square formulas produces what we can easily call a metaball effect. There are, of course, other ways to combine distance functions.

Another issue that has to be dealt with when dealing with distance fields generated by geometric objects is transformation.

since we do not have infinite storage space, the whole grid has to be limited. Ideally, the grid should contain a box-like space with some offset (max distance of an isosurface) with all geometries inside. One can then discretize the box into regular cells, or choose to use a global grid independent of the position of the bounding box.

We can then compute the box origin using positive and negative index counts ,

and

where

and

are the minimal and maximal vertices of the bounding box. We use the same formula for

and

(for those not familiar with the notation, operators

and

stand for

floor and ceil respectively).

A grid point can then be defined as .

Adding more objects into the meta-object modifier will affect a smaller region of the global box – a sub-box containing the added object. Grid points within the sub-box can be inverse-transformed by the inverse of the object’s local transformation matrix (within the modifier’s coordinate system). This is equivalent to transforming the object generating the distance field.

This can have undesired effects when applying uneven scaling on the object. While the object gets stretched in one direction, the grid contracts, hence the field is less dense in the direction of stretching.

A possible solution is to decompose the object matrix and only use the inverse of an unscaled matrix

and use the components of

to adjust the primitive’s parameters (e.g.: box dimension).

Blob from Any Object

Using basic primitives to generate blob surfaces sounds like fun, but with that off the table, we are still limited to only a small set of shapes. Can we generate a meta from any object?

Any object that gets rendered to a scene is either an image or made of triangles. Using a specialized distance function to an arbitrary triangle in 3D space we can simply compute a distance function to a triangle mesh for each grid point, by choosing the closest triangle. But to find the closest triangle to a point we would need to compute the distance to all of them. This means that even for medium-sized grids, distance functions to objects containing more than a handful triangles will be very slow to compute.

As it turns out, there are many interesting ways to solve this performance problem. I will write more about the solutions in my next post.

Aside from that, I welcome any and all constructive insights (in the comments) on how to solve problems like these, as well as questions in case something’s unclear to you.

Anyways, I hope you guys learned something new, and to those of you who want to start implementing the ideas, I wish happy coding!

Oooo, velmi dobre ! Dňa pi 27. 8. 2021, 16:50 Martin Cavarga napísal(a): > mshgrid posted: ” A Possible Resolution…

very nice publish, i definitely love this website, keep on it